Workshops 2017

Discover the latest tools and methodologies available on the market.

Five sessions and a total of 20 workshops give you access to detailed demonstrations and discussions with supplier executives.

ProLab, a unique and innovative module for project traceability and system validation

Objectives

Traditional laboratory software implementation and validation is a time-consuming and costly paper-based process that can be plagued with traceability errors and risks. Paper-based computer system validation requires users to dedicate a large amount of effort to creating and maintaining requirements, defining test protocols and performing test execution.

ProLab, the project and validation management module from the AgiLab Science platform, enables a 100% paperless and automated process from implementation to validation. ProLab manages everything from system and user requirements, specifications and test feedback to qualification protocol scripts (IQ, OQ, PQ) and data sets, execution result capture, approval and sign-off.

Using a QC LIMS implementation case study, AgiLab will demonstrate the significant benefits gained by organizations using this unique paperless approach for project management and system validation.

Session Learnings

Workshop attendees will learn how to improve deployment efficiency, reduce project cost and increase the likelihood of project success by:

- Reducing implementation and configuration project risk

- Facilitating collaboration & project management

- Simplifying the path from user requirements capture to delivery

- Reducing validation cycle time and complexity

- Increasing compliance by tracking protocol executions and approvals

Digital Data Convergence, regulatory audit support and the development of PAT and QbD

Objectives

For pharmaceutical and health-care companies the ultimate objective is the real-time release of products through the design and development of processes that can consistently ensure a predefined quality output.

Plantex is a digital technology platform and SaaS data science application allowing for a holistic approach to necessary and critical process and quality data.

Plantex can drastically cut the time needed to understand and verify the critical process parameters and their effect on critical quality attributes. Plantex will speed up the development of a new, or the continuous improvement of an existing, Process Analytical Technology (PAT) system and/or Quality by Design (QbD) framework.

Session Learnings

Workshop attendees will learn how to transform complex data into actionable knowledge that leads to tangible and profitable business actions. We provide new perspectives that help you make complex decisions in challenging situations by combining problem-solving and decision-making strategies.

NevisData: Hardware-Cloud Software hybrid Solution or how to control the variables that affect the results

Objectives

Today, even laboratories that have already computerized their processes are unable to automatically record, store and associate with their samples the time/location data (collection, delivery and waypoints) and environmental data (normally temperature and humidity) of the specimens to be analyzed during transport, storage, conditioning, processing and sampling. These data, which are not analytical results themselves, matter in the final phase of the laboratory process because they can affect the quality and accuracy of those results, and they are essential to seeing the big picture of the entire laboratory process.

Session Learnings

- The importance for the lab of systematically registering all key parameters of the samples to be analyzed, from pre-sampling to disposal of the sample.

- Solutions for controlling time and environmental parameters and linking them to the samples to be analyzed within LIMS software.

Present and Future Collaborative Innovations Within Drug Discovery Informatics

Objectives

Collaborative Drug Discovery’s CDD Vault software (Activity & Registration, Visualization, Inventory, and ELN) provides an easy and secure way for the collaborative sharing of research data and workflows. Web-based platforms are a natural fit for collaborations due to the economic, architectural, and design benefits of a single platform that transcends any one organization’s requirements.

Additionally, futuristic collaborative drug discovery informatics innovations (such as open-source descriptors, models, and BioAssay Express) are used not only within CDD Vault, but also with other commercial-, academic-, or community-built software tools. Together, we will explore the future of successful scientific collaborations.

Session Learnings

- Experience how collaborative technologies apply to drug discovery project teams.

- Learn to interactively mine data for hits, outliers and relationships.

- View cross-discipline results that highlight structure-activity relationships.

- Understand how to add and generate summary information, normalized activity, and compound selectivity.

- Collaborate on notebook entries, share annotations and mentor teammates in real time.

- Watch technology convert human-readable assay descriptions into computer-readable information.

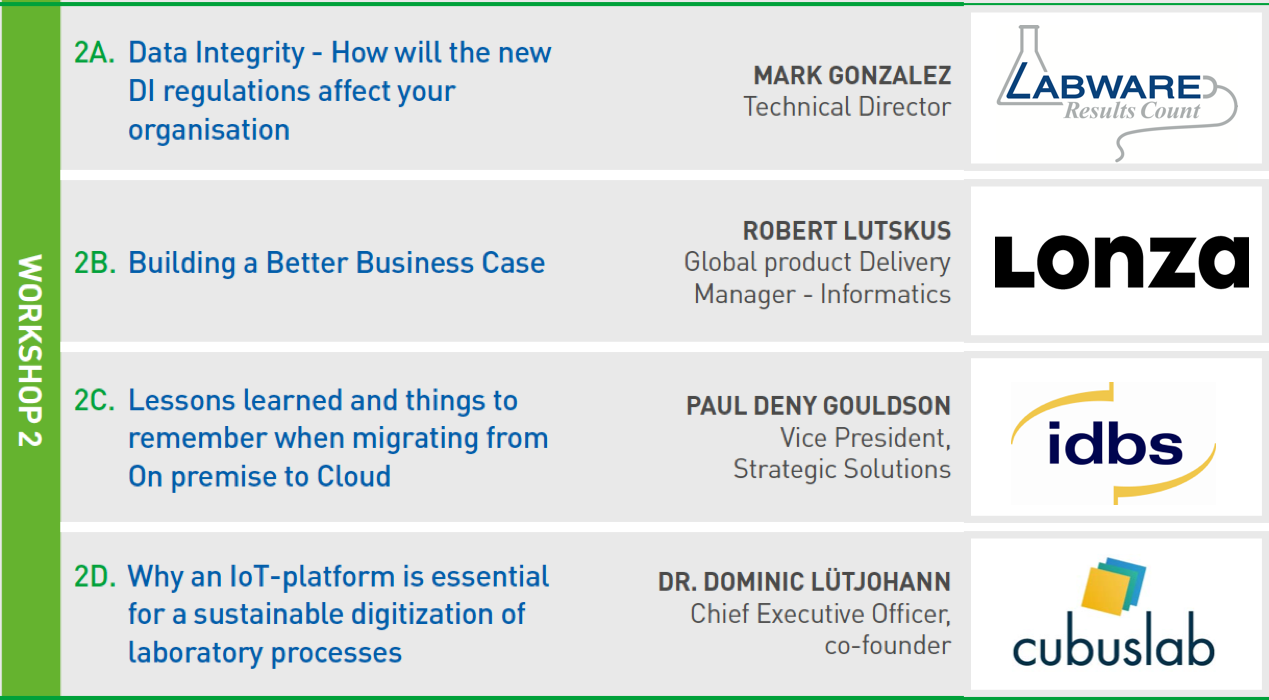

Data Integrity - How will the new DI regulations affect your organisation and how can LabWare LIMS & ELN assist you with them

Objectives

The workshop will discuss the current directives with regard to Data Integrity and how possible regulations could affect scientific information systems that capture all data at source. LabWare will also outline its strategic approach and present solutions to address the possible regulations.

Session Learnings

- What action to take

- How to adapt to the new regulations

- How to plan for the new DI Regulations

Building a Better Business Case

Objectives

Transitioning from a paper-based or legacy electronic system requires multiple levels of approval within an organization. Justifying the cost, selecting the right system and securing approval for your project all depend on the business case you can develop to support it. This session will discuss approaches to the business case, how to ensure the data backs up any assumptions and how to select the system that fits the needs set forth by the organization.

Session Learnings

- Attendees will learn about the process and tools available to develop a business case.

- Attendees will see examples of successful business cases used in the justification of MODA EM, a best-in-class enterprise Environmental Monitoring Software.

Lessons learned and things to remember when migrating from On-premise to Cloud

Objectives

Many companies are migrating their on-premise IT infrastructure to the cloud to take advantage of lower costs and better overall support. This journey, however, is not as simple as flicking a switch. Many factors affect the speed of a migration and the stages it should go through. They include, but are not limited to:

- application design and support for web

- validation of systems

- integration with other cloud and on-premise systems

- security of communications, data and users

- IT policies

- IP policies

We will go over how a number of these hurdles can be identified and managed, using various use cases of IDBS moving customers from on-premise to the cloud.

Session Learnings

- What the initial list of barriers and solutions looks like when moving IT systems to the cloud

- Using case studies, see how others have accomplished the move

- An opportunity to discuss real world issues with your peers.

Why an IoT-platform is essential for a sustainable digitization of laboratory processes

Objectives

In laboratories worldwide, a growing number of processes are being digitalized to improve and accelerate daily laboratory work: documenting and transferring process data and experiment results from multiple devices to ELN, LIMS or CDS systems.

Current approaches of device integration into workflows usually lack one or more crucial requirements: compatibility, scalability, ease-of-use for scientists, or the integration depth with existing systems, especially in large scale enterprise scenarios or the cloud.

Only an easy-to-use, vendor-neutral IoT platform that connects all laboratory devices in a unified way can tackle this challenge and enable researchers to virtually access all their relevant data from anywhere at any time.

Session Learnings

In this workshop, attendees will learn how flexibly and easily an IoT platform can solve the challenges of transforming current processes into digital, connected lab workflows. From the very beginning, the gains are visible: higher-quality documentation and reduced effort, which translate directly into savings for businesses.

- An IoT platform provides real-time data for improved quality and automated documentation.

- An IoT platform allows enterprises to achieve holistic data integrity throughout lab processes.

- An IoT platform seamlessly connects devices, people and data.

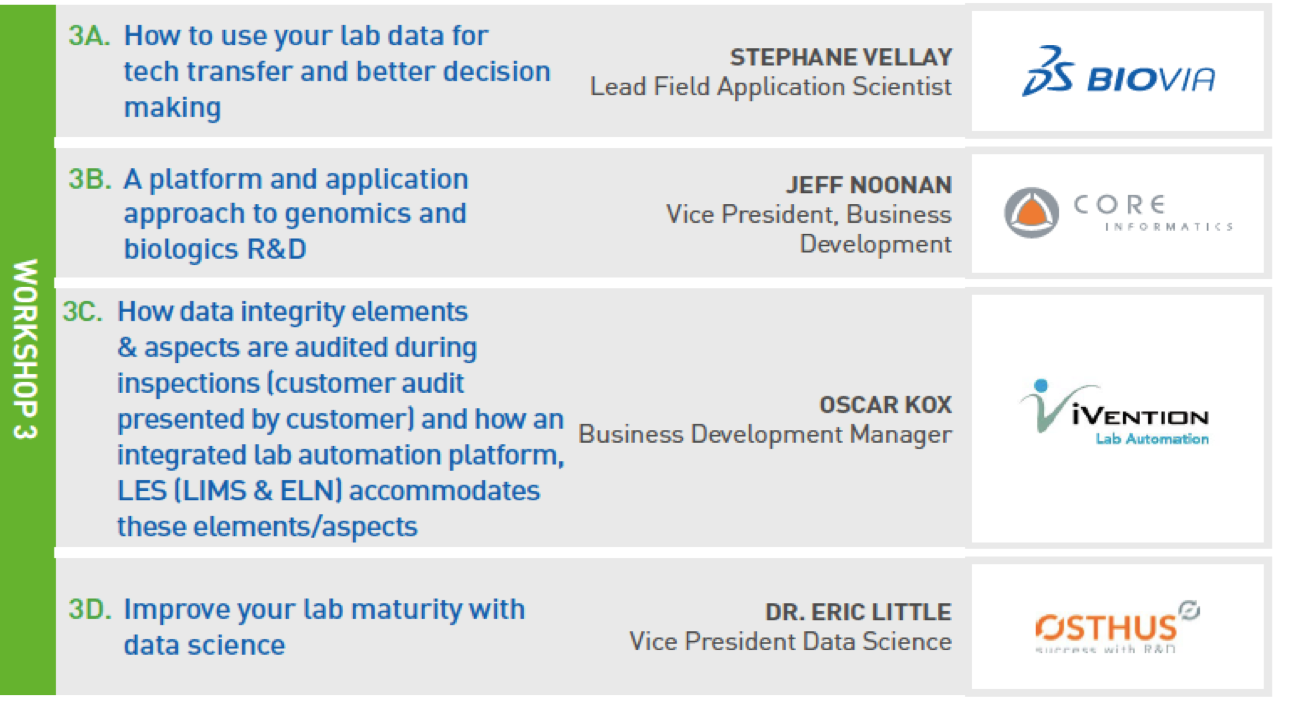

How to Use Your Lab Data for Tech Transfer and Better Decision Making

Objectives

The lab creates an abundance of data that is of great value to organizations for improving the quality of processes and products. In many organizations the access, aggregation and contextualization of data still requires significant effort.

These tasks are non-value adding and prevent staff from focusing on science and decision making.

When data is used for making GMP-relevant decisions, proving data quality and integrity can be an issue. The integration of the validation-ready BIOVIA Discoverant solution with Lab Informatics systems reduces time-consuming and error-prone work and delivers insightful reports, such as stability reports, without the need for data duplication.

Session Learnings

See how

- Your current lab data can be leveraged in an automated way for better process and product insights

- You can improve process design by identifying the critical process drivers

- Late development can share knowledge with manufacturing

- Laboratory and manufacturing data can be gathered on demand and contextualised with the process itself

Depending on time and the number of attendees, the live demo may be replaced by an interactive hands-on session.

A platform and application approach to genomics and biologics R&D

Objectives

Implementing genomics and biopharma R&D software can be challenging and time-consuming. This workshop will describe how pre-built applications from the Platform for Science Marketplace can simplify the implementation of these workflows. The apps from this rapidly expanding ecosystem benefit from capabilities of Core’s Platform for Science, including Core Connect, which streamlines platform integration with other software systems (via a standards-based API).

Session Learnings

- Overview of the NGS and Sanger sequencing app solutions that enable genomics data tracking from sample accessioning through sequencing

- Overview of biologics app solutions which support a variety of biopharma R&D processes including, but not limited to, registration, screening, cell culture, and inventory management.

- Overview of Core Informatics’ latest product Core Connect.

How Data Integrity elements & aspects are audited during inspections (Customer Audit presented by customer) and how an integrated Lab Automation platform, LES (LIMS & ELN) accommodates these elements/aspects

Objectives

The workshop provides a platform to discuss the regulatory focus with regard to Data Integrity. Delegates will get inside information from a large pre-clinical organisation using a cloud-based Lab Automation platform (iLES) about a recent audit by one of the top 3 pharma companies, undertaken in order to be granted a large contract. How can a Lab Execution System, one integrated cloud-based platform with LIMS, ELN, LES and (S)DMS functionality, provide the basis for these audits? The session presents a new approach to Lab Automation compared with classic implementations. The workshop includes functional demonstrations.

Session Learnings

Workshop attendees will learn how to use a Cloud Based Execution Platform with LIMS, ELN and Execution functionality to support a complete Lab Automation process and how this is audited by a Top 3 Pharmaceutical Company.

The workshop will have the following additional content:

- GAMP Auditor, Mr. Frans Leijse, will explain the impact of the new ruling and audits regarding data integrity

- Large Pre-Clinical CRO will present a recent audit on their Lab Automation Platform

- Salesforce will attend this workshop as a “special supporting guest” on Cloud Systems for Life Science

- iVention will present unique functional areas of the iLES Cloud Lab Automation platform

Improve Your Lab Maturity with Data Science

Objectives

We will discuss the move to truly digital laboratories. Currently many labs still rely heavily on paper, or, at best, paper-on-glass approaches, where ELNs or other tools provide paper-like data that can be utilized more easily and with more control.

However, the move to purely digital laboratories is the next advancement, driven in part by the Internet of Things. As devices become smarter and produce data about themselves, it will become increasingly important for scientists to take advantage of more powerful machines, as well as more powerful data techniques, including: semantic technologies, analytics, machine learning, natural language processing and trending/alerting.

Session Learnings

- The current state of laboratory data (paper-to-digital) and trends that show how this data is changing

- What the Internet of Things is and how it will impact laboratories

- What we mean by a truly “digital lab” and how to get there

- Understanding of Semantic Technologies and how they can help move to a digital lab

- Understanding of Data Analytics and how they can help move to a digital lab

- Understanding of what is meant by Big Analysis as data science

Improving data integrity with end-to-end integration of ERP, LES, LIMS and analytical instruments with the AnIML data standard

Objectives

One of the challenges in laboratory data management is integration across systems and across instruments. Today, most instruments produce data in their own proprietary formats. This makes integration and data integrity very difficult to achieve.

This workshop demonstrates how vertical interfaces and standard formats such as AnIML can be used to create an end-to-end integration: A measurement order may start at an ERP system (such as SAP), be orchestrated through a LIMS, passed to instruments for measurement, and reported all the way back up.

In live sessions, both SAP-to-LIMS interfacing and instrument interfacing will be demonstrated. The AnIML format will be used to connect five different instrument types to the system.

Session Learnings

Participants will experience first-hand:

- How AnIML can be used to connect multiple instruments of different types using a single interface

- How LIMS systems can be connected to ERP systems to achieve an end-to-end integration

- How the combination of such integrations and open standards can enable full traceability and data integrity.

- How AnIML data can be used on the desktop, the web and in the cloud

Data Integrity? How to get the most out of your Empower data; secure your MS Excel spreadsheets; and archive all your electronic data!

Objectives

Everybody has analytical data. How do you get the important information out of your Empower database and identify what is critical?

Securing your MS Excel spreadsheets: what solution does Waters provide to help you securely access and use your MS Excel spreadsheets?

Archiving your electronic data: do you follow the guidance on archiving your data?

Session Learnings

- EDS365, extracting Empower data to view and access your important information

- Securely access and use your MS Excel spreadsheets, using NuGenesis LMS

- Archiving your electronic data; vendor independent capturing of your printouts and raw data, indexed and ready for retrieval.

Dealing with a lot of paperwork? Learn how Laboratory Informatics simplifies the complexity of compliance with data integrity.

Objectives

Industry faces the everyday challenge of managing cost-efficient operations, producing high-quality, safe products and having visibility of operations and data.

Ensuring that the cost of quality adds value to operations, becoming lean and streamlined, improving processes and complying with industry regulations are top-level priorities.

Session Learnings

In this session, the Abbott Informatics team will discuss how laboratory informatics simplifies the complexity of compliance with data integrity:

- Laboratory Informatics and why it matters

- Adapting your delivery approach to leverage the power of these trends

- The importance of data transmission and fidelity: what they mean and how they affect the data.

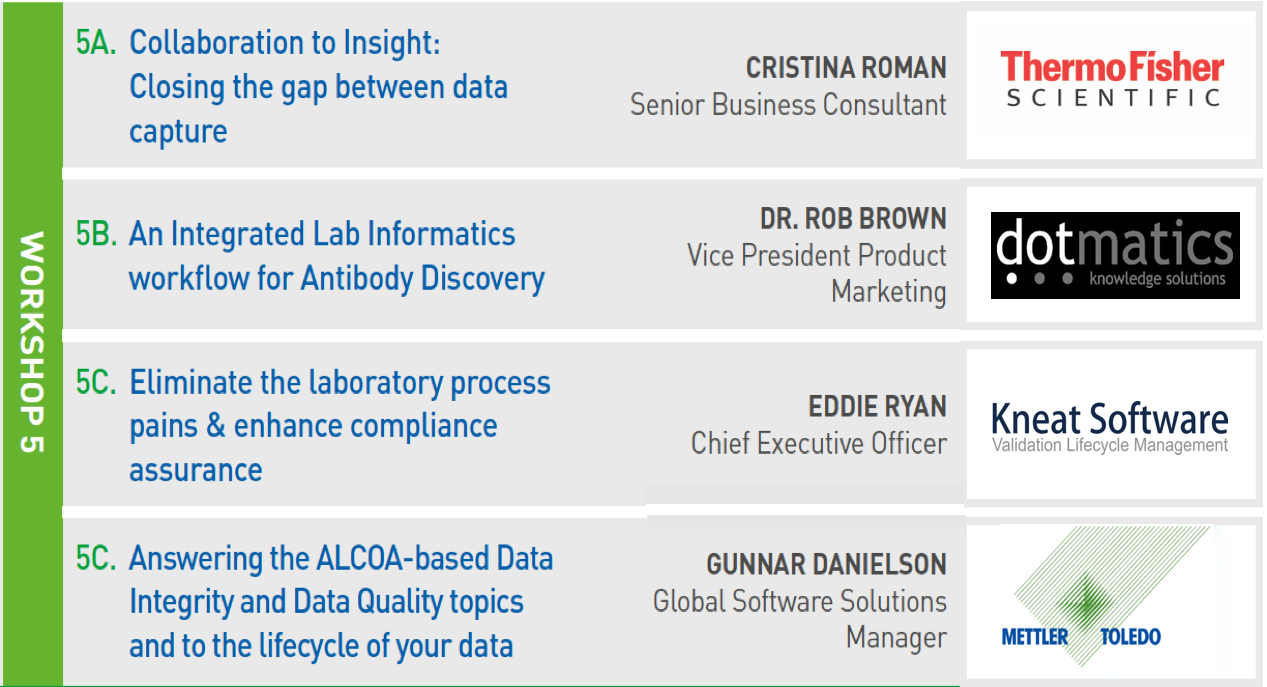

Collaboration to Insight: Closing the gap between data capture and real time decisions

Objectives

The promise of electronic lab notebooks has always been to capture data so that it can be used to make better decisions. Fulfilling this promise has been a challenge since the same flexibility required to make an ELN system easy to use has made it hard to organize the data for decision support.

However, recent advances in Big Data technologies have made it possible to reshape and organize data in ELN systems in situ without substantial data warehousing projects. Once in place, the reshaped data is ready-made for exploration, analysis and discovery with applications like TIBCO Spotfire.

Session Learnings

- Introducing our new PerkinElmer Signals Notebook; learn how this can be used in a collaborative framework whether you are capturing Chemistry, Biological or Formulation data.

- Had enough of all the data silos in your organization? We will showcase our Attivio technology, which connects various data sources and allows you to answer questions you never dared to ask before.

- To conclude our session, we will showcase how information from numerous data systems can be combined to generate dynamic reports using the TIBCO Spotfire platform.

Unlock the value of your data with integrated lab and data management combined with cloud capabilities.

Objectives

Thermo Fisher Scientific provides a complete integrated lab & data management solution, comprising LIMS, CDS, SDMS and Procedural ELN. This workshop will discuss the importance of these multiple capabilities, explore the benefits of a complete, integrated solution and demonstrate some common workflow examples.

IoT, cloud computing and mobile solutions are becoming commonplace in today's homes. We will show how Thermo Fisher applies these technologies to enable a smarter lab.

Session Learnings

- Discover a complete lab software solution comprising LIMS, CDS, SDMS, and LES (Procedural ELN).

- Understand the benefits of such an integrated solution:

  - Increased efficiencies and automation through secure data and information transfer.

  - Reduced costs and risks from deploying a complete single vendor solution.

- Achieve secure, mobile access to data and instruments via Thermo Fisher Cloud.

An Integrated Lab Informatics workflow for Antibody Discovery

Objectives

Antibodies have become an important and growing part of the discovery portfolio of many companies. However, the informatics systems that are used to capture the experiments and associated results are often fragmented and involve manual processes.

In this workshop, we will demonstrate the use of the Dotmatics solution for antibody discovery that provides a fully integrated workflow that uses the Studies Notebook and Bio Register to capture experiments, processes, entities and tests.

Session Learnings

Participants will be able to:

- See the value of an integrated antibody workflow.

- Understand how this can be configured to their organization’s process and environment.

Eliminate laboratory process pains & enhance compliance assurance through electronic lab validation, enabling significant productivity gains, cycle time improvements and resource savings.

Objectives

Rising levels of Validation activity and higher regulatory expectations across laboratory processes are increasing the demand for compliant Validation services.

The laboratory process for equipment management and status tracking is a manual, paper-based process with the following issues:

- High level of effort/time to create, execute and maintain lab Validation records, i.e. requirements, protocols, test scripts, risk assessments, trace matrices

- Cross-lab and cross-site inconsistencies

- No real-time viewable lab equipment/systems status index

- No standardized/structured new equipment process templates/workflows

- No efficient process to leverage previous information for current tasks

- No best practice across projects and sites

- Accurate metrics not available

- Cumbersome record storage and retrieval

- Limited visibility both globally and across sites

- Data integrity concerns i.e. ALCOA principles

Session Learnings

Using an actual client example, the workshop will explain:

- The path from an existing paper process to a future e-validation solution, and the steps to justify, plan and implement a lab e-validation system

- A streamlined and structured process to identify/track/qualify new equipment.

- Comprehensive dashboard with visibility to real-time data for lab equipment & systems

- Ability to share and leverage information across labs/sites

- Ensure compliance with data integrity requirements

- Provide easy, controlled e-access to lab records and user-friendly metrics

- Ability to verify user training before equipment/system use

Data Governance: Addressing ALCOA-based Data Integrity and Data Quality across the lifecycle of your data

Objectives

To ensure Data Quality: the SOP steps, calculations, results, and records are managed automatically and electronically.

To ensure Data Integrity: complete data must be fully traceable and follow the guidance of ALCOA. Start at the beginning – typically at the balance (all benchtop instruments) – with data integrity and data quality from the source. Self-documenting labs begin on the bench.

The industry focus has shifted from efficiency and process optimization towards Data Integrity. Now, with instrument control software, it is possible to achieve both simultaneously: optimize and maximize efficiency in the lab and achieve data integrity. This workshop will compare the cost of a system that supports regulatory compliance with the cost of non-compliance.

Session Learnings

Interact with a live system where the complete raw data, including all metadata, are created, maintained and accessible on any system within the lab. This is a fully functioning system, not theory: a solution that has been installed globally for years, uses common and current best technology standards, and is designed according to the guidance of ALCOA-based regulations.

- Data Governance: Data integrity and Data Quality from the data source

- SOP management optimized on a benchtop instrument

- Metadata from the start of the lifecycle to all informatics systems

- Simplify integrated instruments and software systems

- Minimize the total cost of ownership of an integrated lab

- Lower implementation costs

- Less expensive to validate a complete system vs. each instrument

- Vendor maintained instrument integration without programming

- From instrument level software up to LIMS and cloud storage